Logistic Regression

Logistic regression is a type of supervised learning algorithm used for classification tasks in machine learning. It is a statistical method that analyzes a dataset in which there are one or more independent variables (also known as features) that determine an outcome or dependent variable (also known as target variable).

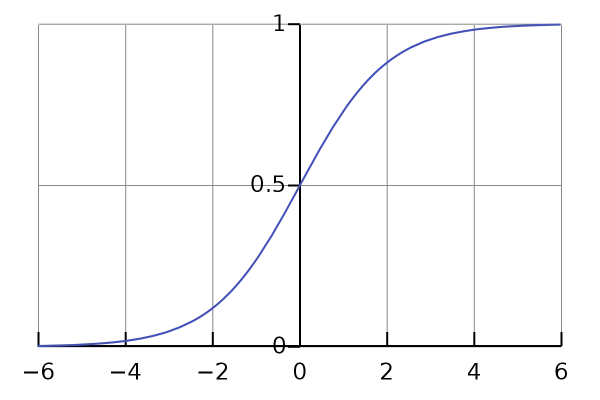

The logistic regression model works by first learning a set of weights for each input feature. These weights are used to calculate a score for each data point, which is then transformed into a probability score using the sigmoid function. The sigmoid function maps any input value to a value between 0 and 1, which can be interpreted as the probability of the data point belonging to the positive class.

Python Implementation

import numpy as np

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn.datasets import load_iris

# Load the iris dataset

iris = load_iris()

# Split the dataset into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.2)

# Create a logistic regression object

logreg = LogisticRegression()

# Fit the model to the training

logreg.fit(X_train, y_train)

# Predict the classes of the test set

y_pred = logreg.predict(X_test)

# Print the accuracy of the model

print("Accuracy:", logreg.score(X_test, y_test))

Output:

Accuracy: 0.96666

In this example, we first load the iris dataset using the load_iris() function from scikit-learn. We then split the dataset into training and testing sets using train_test_split() function. We create a logistic regression object using LogisticRegression() and fit the model to the training data using fit() method. We then predict the classes of the test set using predict() method and calculate the accuracy of the model using score() method.

Advantages of Logistic Regression

Logistic regression is a powerful and widely used classification algorithm in machine learning, with several benefits, including:

-

Simplicity: Logistic regression is a simple and easy-to-understand algorithm, making it a popular choice for beginners and experts alike.

-

Fast training and prediction: Logistic regression can be trained quickly on large datasets with many input features, making it suitable for real-time applications.

-

Interpretable results: Logistic regression produces interpretable results that can be used to understand the relationship between input features and the target variable. This can help in making informed decisions.

-

Probabilistic output: Logistic regression outputs a probability score for each data point, providing a measure of uncertainty in the prediction. This can be useful in decision-making, risk assessment, and resource allocation.

-

Robustness: Logistic regression is robust to noise and missing data, making it suitable for datasets with missing values or noisy data.

-

Versatility: Logistic regression can be used for both binary and multi-class classification tasks. It can also be modified to handle non-linear decision boundaries using techniques such as kernel methods.

Limitations

Logistic regression is a powerful and widely used classification algorithm in machine learning, but it also has some limitations, including:

-

Linear decision boundary: Logistic regression assumes a linear decision boundary between the two classes. This means that it may not be suitable for classification tasks where the decision boundary is highly non-linear.

-

Sensitive to outliers: Logistic regression is sensitive to outliers in the data. Outliers can have a significant impact on the learned weights, leading to a less accurate model.

-

Multicollinearity: Logistic regression assumes that the input features are independent of each other. When there is multicollinearity (high correlation) among the input features, the model may not perform well.

-

Imbalanced classes: Logistic regression performs poorly when the classes are imbalanced, meaning that one class has significantly more data points than the other class. This can lead to a biased model that favors the majority class.

-

Limited to binary classification: Logistic regression is limited to binary classification tasks, where the target variable has only two classes. It cannot be used for multi-class classification tasks without modifications.

-

Non-probabilistic outputs: While logistic regression outputs a probability score for each data point, it does not provide a clear interpretation of the underlying probability distribution. This can make it difficult to interpret the model output and make informed decisions.

Advertisement

Advertisement