Activation Functions

Activation functions are a crucial part of any neural network because they introduce non-linearity into the model, which allows the network to approximate complex functions. Without them, a stack of layers would collapse into a single linear transformation. This section covers several commonly used activation functions along with their mathematical expressions, ranges, and applications.

- Sigmoid function

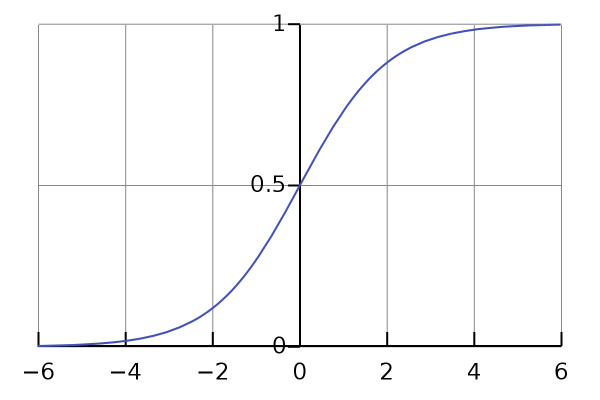

The sigmoid function is a widely used activation function that maps any real value to a value between 0 and 1. Its mathematical expression is:

$$f(x) = \frac{1}{1 + e^{-x}}$$

The output of the sigmoid function lies strictly between 0 and 1. It is typically used in the output layer for binary classification problems, where the output is interpreted as a probability. Its main limitation is the vanishing gradient problem: for inputs of large magnitude the curve flattens, so the gradient approaches zero and learning slows down.
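The sigmoid and its derivative can be sketched in plain Python as follows. This is a minimal illustration, not a reference implementation: the names `sigmoid` and `sigmoid_grad` are chosen here for the example, and the branch on the sign of `x` is one common way to avoid overflow in the exponential.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-x)), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x),
    # so that exp() is never called with a large positive argument.
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid, f(x) * (1 - f(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


# The gradient peaks at x = 0 and shrinks toward zero as |x| grows.
for x in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(f"x={x:6.1f}  sigmoid={sigmoid(x):.6f}  grad={sigmoid_grad(x):.6f}")
```

The printed gradients are largest at `x = 0` (where the value is 0.25) and become very small at the extremes, which is the vanishing gradient behaviour described above.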

The curve of the sigmoid function is shown in the figure above.

- ReLU function

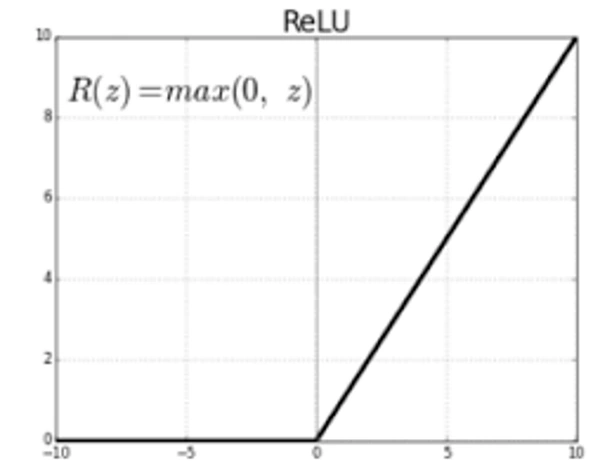

The ReLU (Rectified Linear Unit) function is another popular activation function that has been shown to perform well in many deep learning applications. Its mathematical expression is:

$$f(x) = \max(0, x)$$

The output of the ReLU function ranges from 0 to infinity. It is commonly used in deep learning models because its gradient is 1 for every positive input, which mitigates the vanishing gradient problem, and because it is cheap to compute, which tends to speed up training. Its main limitation is the "dying ReLU" problem: a neuron whose input is always negative outputs zero, receives zero gradient, and can therefore become permanently inactive.
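A short sketch of ReLU and of the "dying ReLU" effect is given below. The weight `w`, bias `b`, and input values are hypothetical numbers picked only to force a negative pre-activation; they do not come from any real model.

```python
def relu(x: float) -> float:
    """ReLU activation: max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise.

    The value at exactly x = 0 is a convention; 0 is used here.
    """
    return 1.0 if x > 0 else 0.0


# Hypothetical single neuron: output = relu(w * x + b).
w, b = 1.0, -5.0
inputs = [0.5, 1.0, 2.0]

# Gradient of the output with respect to w for each input (chain rule).
grads = [relu_grad(w * x + b) * x for x in inputs]
print(grads)  # every pre-activation is negative, so every gradient is 0.0
```

Because every gradient is zero, a gradient-based update would leave `w` unchanged for these inputs, which is how a neuron can get stuck in the inactive state.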

The graph of the ReLU function is shown in the figure above.

- Leaky ReLU function

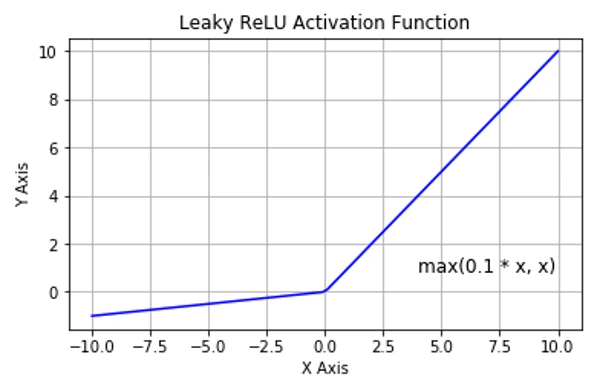

The Leaky ReLU function is a modified version of the ReLU function that aims to address the "dying ReLU" problem. Its mathematical expression is:

$$f(x) = \begin{cases} x, & x > 0 \\ 0.1x, & x \le 0 \end{cases}$$

The output of the Leaky ReLU function ranges from -infinity to infinity. Because the slope for negative inputs is small but nonzero, gradients still flow when a neuron's input is negative. This lets Leaky ReLU keep ReLU's resistance to vanishing gradients while addressing the "dying ReLU" problem.
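The following sketch shows Leaky ReLU with the slope of 0.1 used in the expression above. The parameter name `alpha`, and the neuron values `w`, `b`, and `inputs`, are assumptions made for the example.

```python
def leaky_relu(x: float, alpha: float = 0.1) -> float:
    """Leaky ReLU: x for positive inputs, alpha * x otherwise."""
    return x if x > 0 else alpha * x


def leaky_relu_grad(x: float, alpha: float = 0.1) -> float:
    """Gradient of Leaky ReLU: 1 for positive inputs, alpha otherwise."""
    return 1.0 if x > 0 else alpha


# Hypothetical neuron whose pre-activation w * x + b is always negative.
w, b = 1.0, -5.0
inputs = [0.5, 1.0, 2.0]

# Gradient of the output with respect to w for each input (chain rule).
grads = [leaky_relu_grad(w * x + b) * x for x in inputs]
print(grads)  # small but nonzero, so the weight can still be updated
```

With a plain ReLU these gradients would all be zero; here they are scaled by `alpha` instead, so the neuron can recover.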

- Tanh function

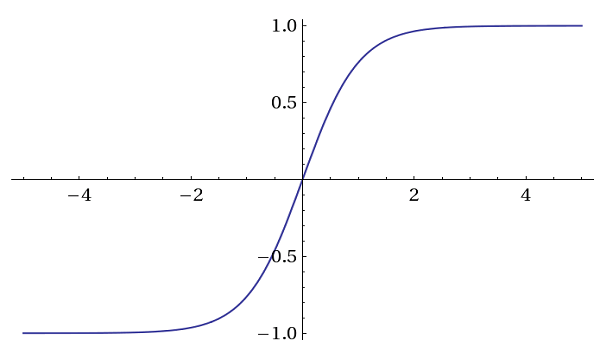

The Tanh (Hyperbolic Tangent) function is another popular activation function that maps any real value to a value between -1 and 1. Its mathematical expression is:

$$f(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

The output of the Tanh function lies strictly between -1 and 1. Because the output can be negative, it is not a probability; instead, Tanh is commonly used in hidden layers, where its zero-centered output is often an advantage over the sigmoid. Like the sigmoid, it flattens for inputs of large magnitude and therefore also suffers from the vanishing gradient problem.
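As a rough check of the expression above, the sketch below evaluates Tanh directly from its definition and compares it with Python's built-in `math.tanh`. The helper names `tanh_from_definition` and `tanh_grad` are illustrative only. The direct formula overflows for inputs of very large magnitude, so the library function is the safer choice in practice.

```python
import math


def tanh_from_definition(x: float) -> float:
    """Tanh computed literally as (e^x - e^-x) / (e^x + e^-x).

    Fine for moderate x; math.exp overflows for very large |x|.
    """
    ex, enx = math.exp(x), math.exp(-x)
    return (ex - enx) / (ex + enx)


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2."""
    t = math.tanh(x)
    return 1.0 - t * t


for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(f"x={x:5.1f}  definition={tanh_from_definition(x):.6f}  "
          f"math.tanh={math.tanh(x):.6f}  grad={tanh_grad(x):.6f}")
```

The gradient equals 1 at `x = 0` and decays toward zero as the magnitude of `x` grows, mirroring the saturation seen with the sigmoid.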

-

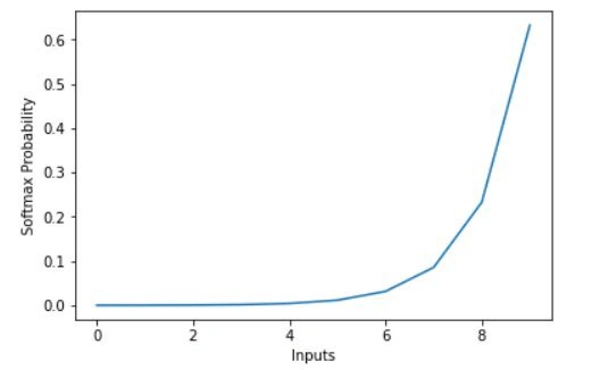

Softmax function

The Softmax function is used in the output layer of a neural network for multiclass classification problems. It takes a vector of real numbers as input and outputs a probability distribution over the classes. Its mathematical expression is:

$$f(x_i) = \frac{e^{x_i}}{\sum_{j=1}^{n} e^{x_j}}$$

where $n$ is the number of classes and $x_i$ is the input for the $i$-th class.

Each output of the Softmax function lies between 0 and 1, and the outputs sum to 1, so they can be read as the predicted probability of each class.
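A minimal Softmax sketch in plain Python follows. Subtracting the maximum input before exponentiating is a common numerical-stability step that is not part of the expression above; it leaves the result unchanged because the common factor cancels in the ratio. The input scores are made-up values.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Softmax over a list of scores, returning values that sum to 1."""
    # Shift by the maximum so the largest exponent is exp(0) = 1,
    # which avoids overflow for large scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.1]  # hypothetical raw outputs for three classes
probs = softmax(scores)
print(probs)
print(sum(probs))  # sums to 1, up to floating-point rounding
```

The largest score receives the largest probability, and the ordering of the inputs is preserved in the outputs.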

The graph of the Softmax function is shown in the figure above.

Thus, the choice of activation function depends on the problem being solved and the characteristics of the data. Selecting an appropriate function can have a significant impact on the performance of the network.
